CSGO Chronicles: Unfolding the Gaming Universe

Dive into the latest news, tips, and trends in the world of Counter-Strike: Global Offensive.

When Algorithms Have Opinions: The Quirky Side of Machine Learning

Uncover the quirky side of machine learning! Discover how algorithms form surprising opinions and their impact on our digital world.

How Do Algorithms Develop Opinions? An Inside Look at Machine Learning Decisions

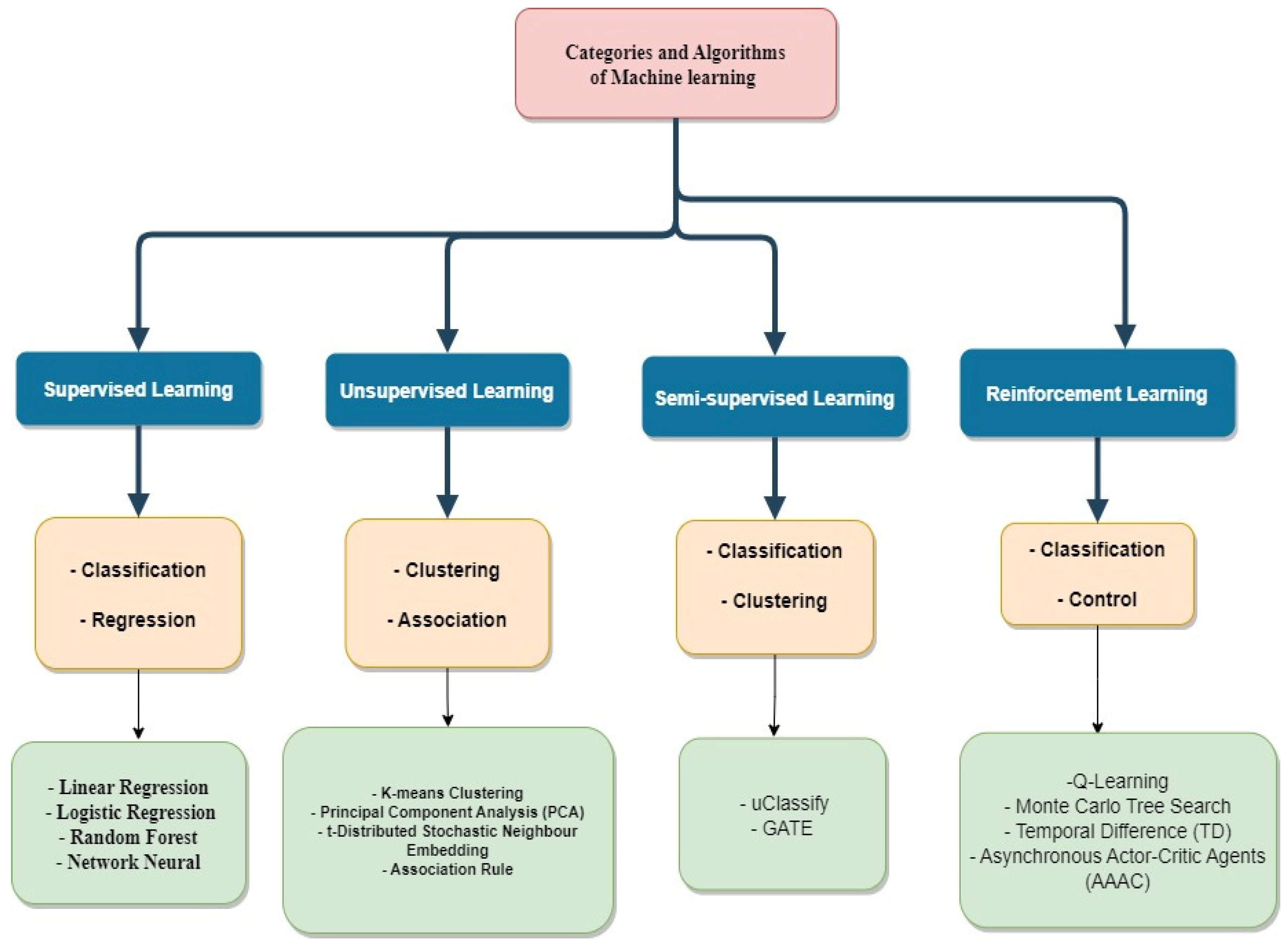

Algorithms develop what we might call opinions through machine learning. At its core, machine learning involves feeding large amounts of data into an algorithm, which then finds patterns and relationships within that data. The process typically consists of several steps, including data collection, preprocessing, and model training. During training, the algorithm may use supervised learning, where it learns from labeled examples, or unsupervised learning, where it identifies patterns without explicit labels. Either way, the resulting 'opinion' is a set of correlations learned from the data.
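To make the difference between supervised and unsupervised learning concrete, here is a minimal sketch in plain Python. It is an illustration only, not how any particular library works: the data, the labels "short" and "long", and the function names `train_centroids`, `predict`, and `two_means` are all made up for this example.

```python
from statistics import mean

def train_centroids(samples, labels):
    """Supervised: compute one average value (centroid) per label."""
    groups = {}
    for x, y in zip(samples, labels):
        groups.setdefault(y, []).append(x)
    return {label: mean(values) for label, values in groups.items()}

def predict(centroids, x):
    """Return the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(centroids[label] - x))

def two_means(samples, iterations=10):
    """Unsupervised: split 1-D samples into two clusters without labels."""
    low, high = min(samples), max(samples)
    for _ in range(iterations):
        near_low = [x for x in samples if abs(x - low) <= abs(x - high)]
        near_high = [x for x in samples if abs(x - low) > abs(x - high)]
        if not near_high:  # all samples identical: nothing to split
            break
        low, high = mean(near_low), mean(near_high)
    return low, high

# Hypothetical measurements with human-provided labels (assumed data).
samples = [1.0, 1.2, 0.8, 5.0, 5.3, 4.7]
labels = ["short", "short", "short", "long", "long", "long"]

centroids = train_centroids(samples, labels)
print(predict(centroids, 1.5))  # → short

# The same numbers with no labels: the algorithm finds two groups itself.
print(two_means(samples))
```

The supervised model can name its answer because it was given the names. The unsupervised one only reports that two groups exist and where their centers are. Deciding what those groups mean is left to a person.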

Once the model is trained, it can make predictions or decisions that reflect what it has learned, akin to an opinion. However, the reliability of these decisions depends largely on the quality and quantity of the data provided. Biases in the data, the choice of algorithm, and the settings chosen for training can all influence the output. As a result, while algorithms can develop opinions based on data-driven analysis, they are not infallible and can behave unexpectedly if they are not validated and monitored.
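A small sketch can show how data quality and a training setting change the outcome. It uses a simple nearest-neighbour vote over invented data. The "ham"/"spam" labels, the numbers, and the function `knn_predict` are assumptions made for illustration, and one training point is deliberately mislabeled.

```python
from collections import Counter

def knn_predict(training, x, k):
    """Vote among the k training points closest to x (1-D features)."""
    nearest = sorted(training, key=lambda pair: abs(pair[0] - x))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical (feature, label) pairs; the point at 2.2 carries a noisy label.
training = [
    (1.0, "ham"), (1.5, "ham"), (2.0, "ham"),
    (2.2, "spam"),  # mislabeled on purpose: it sits among the "ham" points
    (6.0, "spam"), (6.5, "spam"), (7.0, "spam"),
]

print(knn_predict(training, 2.3, k=1))  # → spam (follows the noisy neighbour)
print(knn_predict(training, 2.3, k=3))  # → ham (two clean neighbours outvote it)
```

The input and the algorithm are the same in both calls. Only the setting `k` differs, and a single bad label is enough to flip the answer when `k` is 1.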

The Quirky Misadventures of AI: Funny Stories from the World of Machine Learning

In the rapidly evolving realm of machine learning, one would assume that artificial intelligence is all about precision and data crunching. However, the quirky misadventures of AI remind us that humor often lurks behind the algorithms. Take, for example, a commonly retold story in which an AI trained to recognize dogs misidentified a loaf of bread as a fluffy corgi! Mistakes like this show what can happen when a model is trained on limited or unrepresentative data and latches onto surface features such as color and shape, leading to bloopers that can bring a smile to even the sternest of data scientists.

Another amusing episode took place when a language model was asked to generate captions for various images. Instead of producing insightful descriptions, it insisted on labeling pictures of solemn landscapes as 'The World's Chillest Party' and family gatherings as 'Ultimate Nap Time.' These moments capture the humorous side of AI: while machines strive for intelligence, they can sometimes deliver pure comedy gold by misinterpreting context and meaning in the most delightful ways.

Can Algorithms Be Biased? Understanding the Quirks of Data-Driven Opinions

The question of bias in algorithms has gained significant attention in recent years as reliance on data-driven technologies increases. Algorithms, which serve as the backbone for decision-making in various domains—from hiring practices to criminal sentencing—are only as good as the data they are trained on. If the data reflects historical biases or societal inequalities, the outputs generated by these algorithms can perpetuate or even exacerbate existing disparities. This phenomenon is not merely a technical issue; it underscores the ethical implications of allowing machines to shape opinions and actions that affect real lives.

A key part of understanding algorithmic bias is recognizing how algorithms process information. They rely on mathematical models fitted to large amounts of data, so if that data is incomplete or skewed, the results can be misleading. For example, consider an algorithm trained on a dataset that predominantly features one demographic. Because it has seen far fewer examples from other groups, it tends to perform better for the majority group and may effectively favor it, leading to unfair outcomes in its applications. Thus, it is crucial not only to improve the transparency and accountability of these systems but also to audit and re-evaluate them continuously to mitigate bias.
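One simple form such an audit can take is comparing how often each group receives a positive decision. The sketch below is a minimal illustration under stated assumptions: the groups "A" and "B", the decision records, and the helpers `selection_rates` and `disparity_ratio` are all hypothetical, and a real audit would look at more than one metric.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of positive decisions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}

def disparity_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:  # nobody was selected, so no group is favored
        return 1.0
    return min(rates.values()) / highest

# Hypothetical model decisions as (group, approved) pairs.
decisions = (
    [("A", True)] * 6 + [("A", False)] * 2
    + [("B", True)] * 1 + [("B", False)] * 3
)

rates = selection_rates(decisions)
print(rates)  # → {'A': 0.75, 'B': 0.25}
print(round(disparity_ratio(rates), 2))  # → 0.33
```

A ratio well below 1.0, as here, does not prove the model is unfair on its own, but it marks the decisions as worth a closer look. Running the same check each time the model or its data changes is one way to make the re-evaluation continuous.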